(Update: It’s been only a little over six months since I wrote this, and OpenAI’s Sora already does a very impressive job of addressing my list of complaints below. Once I get a chance to use it, I’ll add my thoughts here.)

RunwayML Gen-2 can generate video from a text prompt

Wait, that’s basically what I do! A client sends me a script/description, and I return a video/animation… hmmm…

I have no doubt that someday everyone’s title will be Creative Director, but after playing around with Gen-2, that future still seems quite far away.

Neat toy you got there

Here is my current list of complaints regarding RunwayML Gen-2 (these may seem obvious):

- Poor video specs (low framerate, low resolution, grainy image)

- TRT (total running time) is currently limited to four seconds

- Doesn’t accurately follow my prompts

That last one is a dealbreaker when talking about professional applications. Maybe if I spent LOTS of time learning the ins and outs of prompt engineering for this specific tool, I could bend it to my will, but I’m skeptical. Then they’ll update the model and I’ll have to learn new ins and outs. But even if it did accurately generate what I had in my head, the poor specs and 4-second TRT greatly limit the kinds of projects for which it would be useful.

One anecdote

Here’s a looping animation I made with Cinema 4D:

As a test, I tried to recreate this using Gen-2. Here are some of the results:

Try as I might, I couldn’t get Gen-2 to make the hourglass flip or have large blue/teal/white beads or be centered in the frame. I couldn’t even make it look like a decent hourglass… If anyone out there is able to successfully recreate something even close to my C4D animation, I’d love to know what prompt you used.

But didn’t Disney just recently use AI to make a Marvel title sequence?

Yes, they sure did (and a lot of people aren’t happy).

However, if you view the title sequence in question (which I sadly can’t share here because there are currently no good copies on YouTube), you will see that it features the low frame rates and grainy image quality I complained about above. And that’s fine! The people who made this opener leaned into the AI tools and made something that I think works with the subject matter. But in my opinion, this specific title sequence is more the exception that proves the rule (the rule being that current text-to-video tools have very limited utility) than evidence that motion design is a dead-end career path. Btw: motion designers and other humans were still involved in making this opener, of course…

To me, the backlash over this opener seems more motivated by emotion than a practical fear of reduced job security. Many artists rightly feel that humans should be at the center of what we call “art.” But that’s basically a tautology: human art is made by humans.

But are we really talking about “art” here? As much as I respect the talent of people who work on awesome main title sequences, I find it just plain silly to lament the fact that real human “artists” didn’t draw the lizard animations for the Secret Invasion opener. I’ve dedicated most of my adult life to the creation of visually pleasing imagery, yet I will readily admit that what I do to pay the bills isn’t exactly art. Design and art are two very different things, and designers have always had to update their toolkits to stay useful.

A better example, perhaps

I find this example of generative AI to be much more interesting:

Pretty impressive! It’s kind of wonky in places, but one can imagine the kinks being worked out soon enough. Alas, it appears hand-drawn animation has no future… millions will be out of work!**

Except then you watch the tutorial they provide for how to do this. And you realize it involved a lot of real, no-foolin’ WORK. First, they had to do a live-action shoot. Then, they had to train models, feed the shots through Stable Diffusion, and deal with lots of trial and error. Then compositing. Sound design. Etc. All things that humans still get paid to do.

Don’t get me wrong; the technology will keep improving. Someday only truly passionate artists (or highly paid specialist designers) will still draw things or make keyframes in [future animation software] by hand. But for now – in the real world where clients have very specific needs and having precise control over your end product is of utmost importance – generative AI isn’t as useful as it first appears.

**Note: Disney closed their traditional animation department nearly 20 years ago, laying off 250 people. Outside of anime (which generally employs a mix of animation techniques), hand-drawn animation has been nearly dead for a while, and it never employed all that many people.

So where does that leave me?

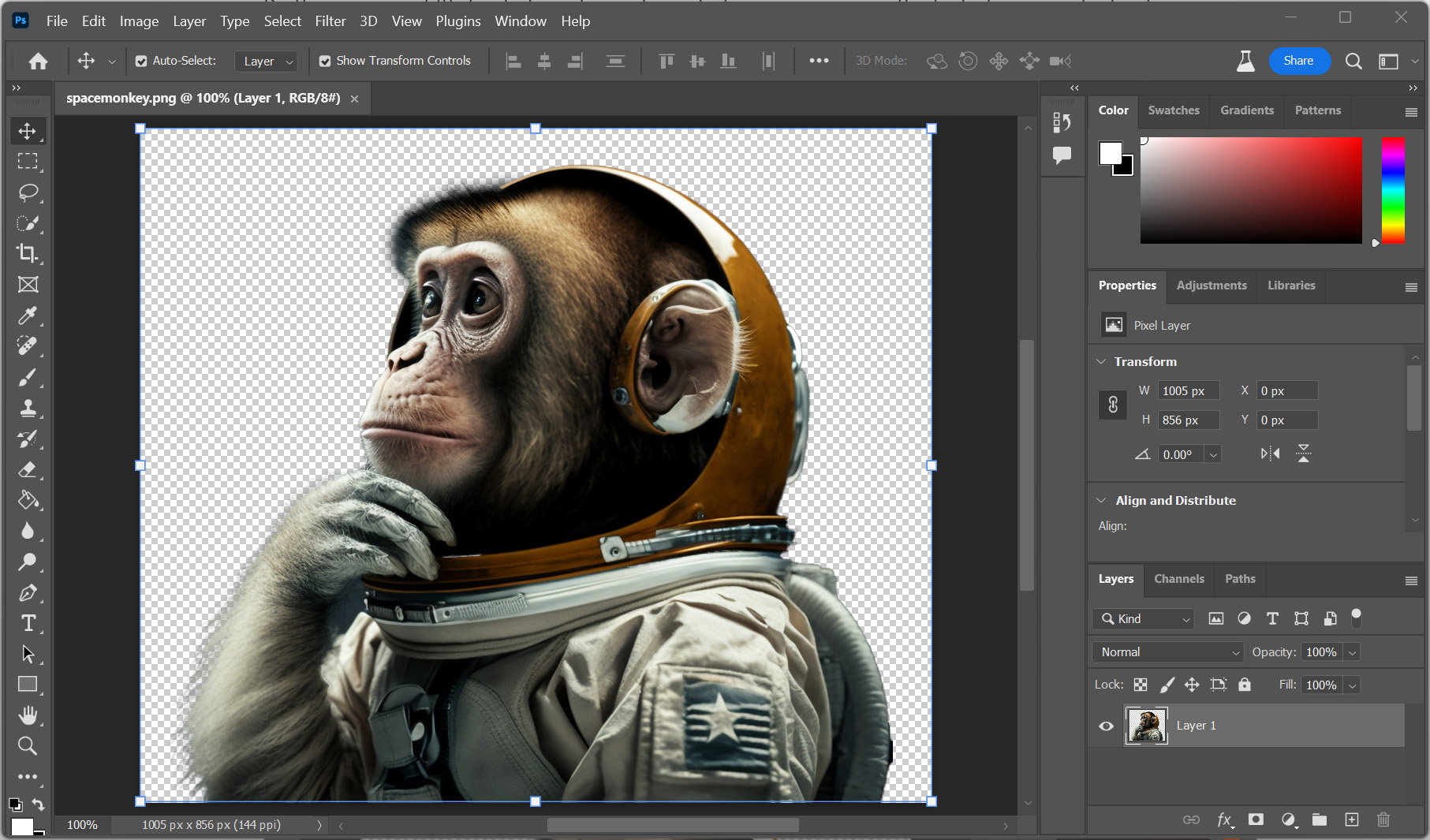

I use “AI” tools every day. Generative Fill and automatic subject selection in Photoshop are huge time-savers. Premiere Pro can transcribe dialogue and create closed captions in seconds, which used to be mind-numbing work that I’m happy to never again get paid to do. Midjourney is handy when I can’t find the right stock image (need a monkey wearing a space suit doing “the thinker”? Done). And I’m sure I’ll find a use for RunwayML’s text-to-video service. It will just be one more tool on my toolbelt.

Ultimately, though, one has to ask: will the things they pay me to do today be automated in N years? And to that question, I answer: probably! Most of it probably will!

So between now and [now+N], what is the best move for someone like me? Well, I could freak out and say “shame on you” to the people making & using the tools that will change my job. I could hyperbolically proclaim that “this AI-generated opener is the worst opener in the history of openers!” Or I could inspect the tools, kick their tires a bit, see what other people are doing with them, and keep it all in mind when starting a new project.

I firmly believe that if I follow that strategy, I will play a useful human role in whatever my job looks like in N years.